How ChatGPT is Changing the Game in Data Analytics!

This weekend, I had the opportunity to subscribe to "ChatGPT Plus." I spent some time exploring how AI, like ChatGPT, will change the way we work with our data.

Currently, I am working on a large enterprise financial transformation project. So why not start with a simple order intake and end up getting a Profit & Loss statement from ChatGPT?

The results were astonishing, which made me wonder whether we should rethink the way we do data and analytics.

When reading through my chat with ChatGPT, it is best to look at the screenshots first and then read my comments. I have described each step, and all screenshots are from the same conversation.

At the end of my summary, I will try to look ahead 1-2 years and determine what kind of decisions we can make based on this understanding.

A real-world scenario from "Selling Bananas" to "Profit and Loss"

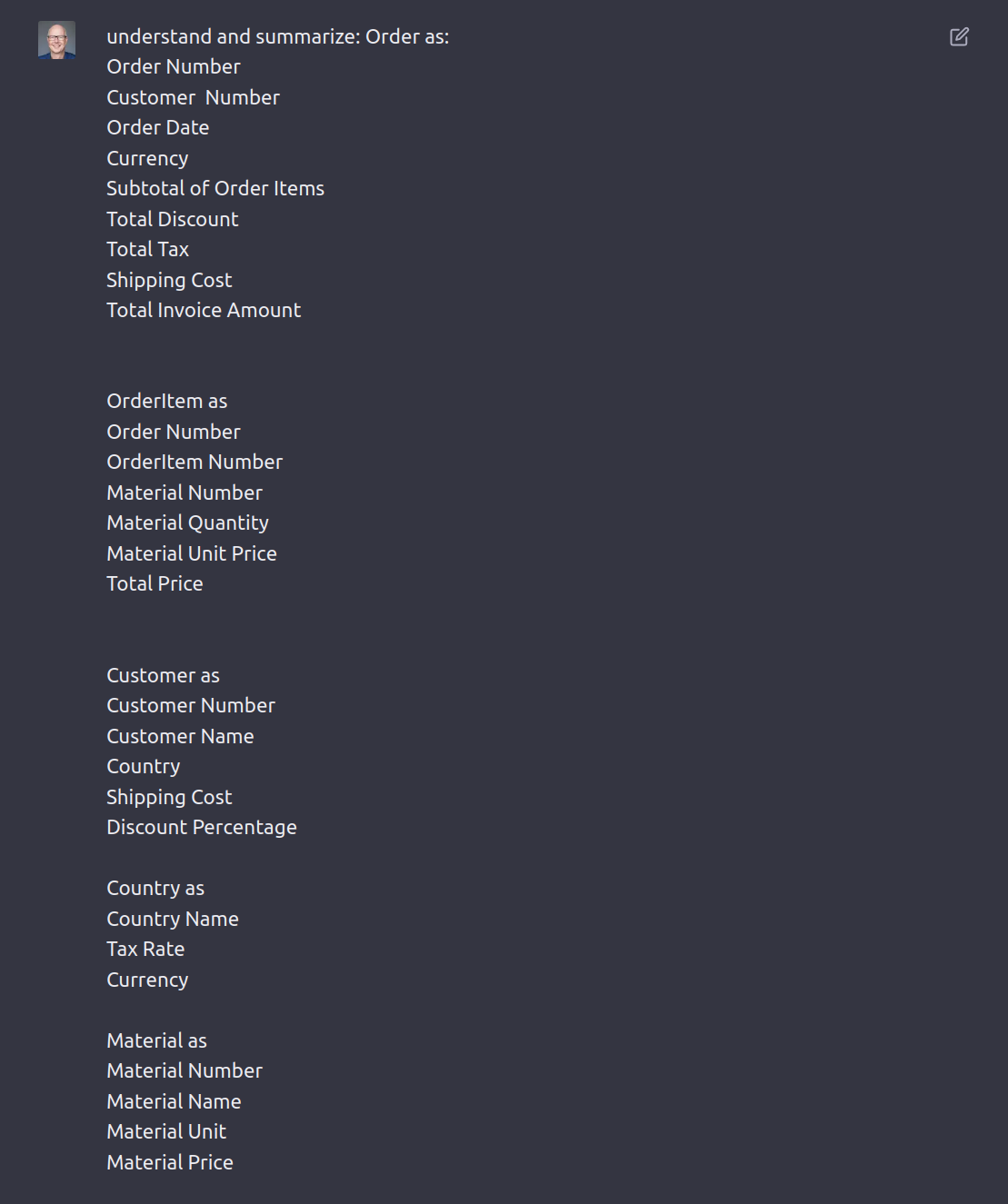

The scenario I want to run is a simple sales process. To start, I give ChatGPT the prompt, or initial context, for our conversation, so that it understands we are talking about orders, order items, materials and customers.

But it could be any other scenario or data structure.

What does it mean?

ChatGPT is a chatbot that can keep the context of a conversation, up to about 4000 tokens (a word can be 1-3 tokens). Once a conversation is longer than this limit, it "forgets" the earliest tokens (FIFO). This limit is a compromise between performance and result quality. For our experiment that is enough.
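To make the FIFO behaviour more concrete, here is a minimal sketch. It is not how ChatGPT is actually implemented: the `ContextWindow` class and `count_tokens` helper are hypothetical, and counting one token per word is a deliberate simplification.

```python
from collections import deque


def count_tokens(text: str) -> int:
    # Assumption: one token per whitespace-separated word.
    # Real tokenizers split words into 1-3 (or more) tokens.
    return len(text.split())


class ContextWindow:
    """Hypothetical FIFO buffer that keeps messages within a token budget."""

    def __init__(self, max_tokens: int = 4000):
        self.max_tokens = max_tokens
        self.messages = deque()
        self.total = 0

    def add(self, message: str) -> None:
        self.messages.append(message)
        self.total += count_tokens(message)
        # Drop the oldest messages first until the budget is respected.
        while self.total > self.max_tokens and len(self.messages) > 1:
            oldest = self.messages.popleft()
            self.total -= count_tokens(oldest)


# Tiny budget so the effect is visible.
window = ContextWindow(max_tokens=8)
window.add("We talk about orders and customers")  # 6 tokens
window.add("Christian buys five bananas")         # 4 tokens, total would be 10
print(list(window.messages))
```

With a budget of 8 tokens, the first message is dropped as soon as the second one arrives, which is the "forgetting" described above.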

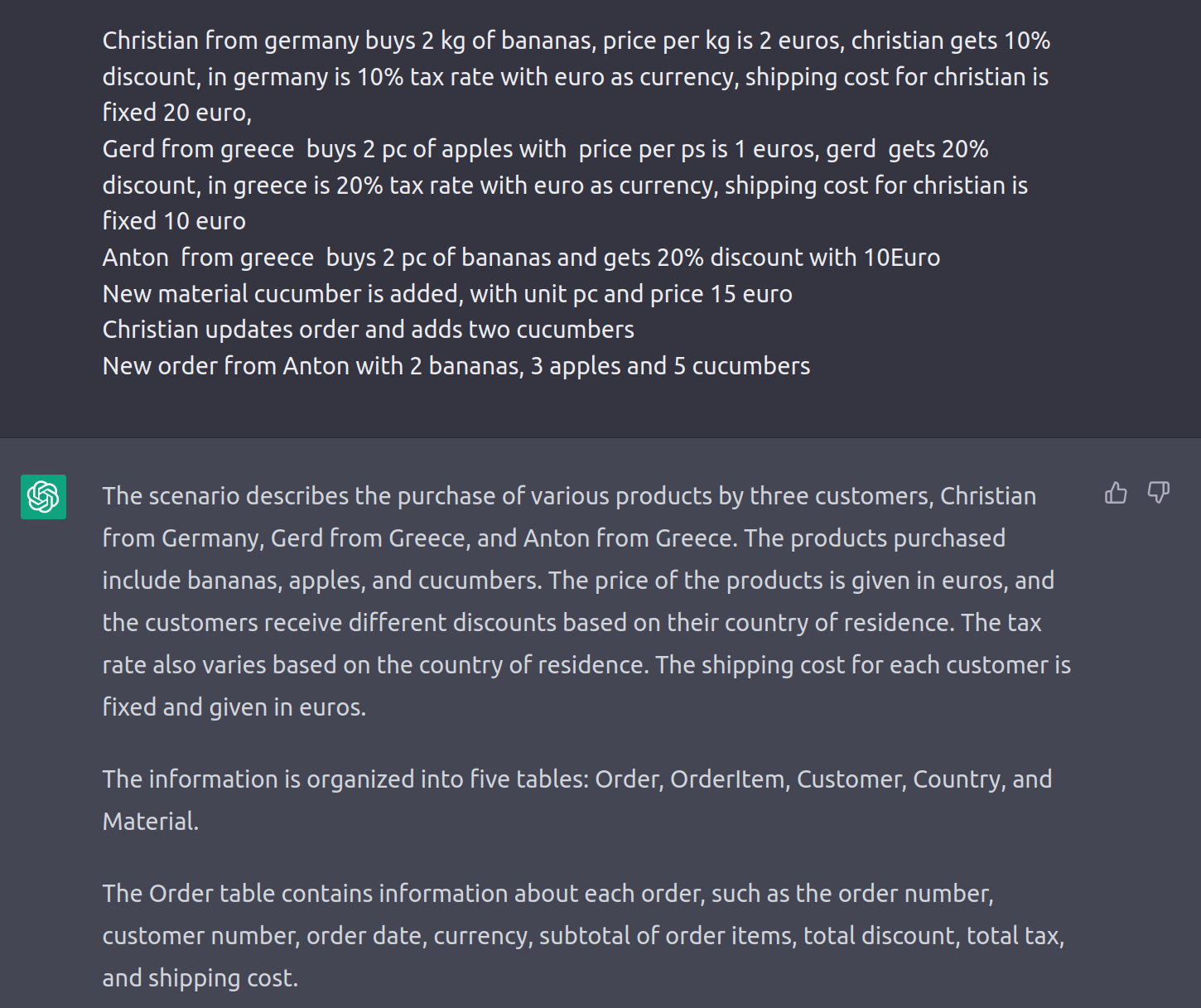

Enter Sales Transactions as "Free Text"

I found this mind-blowing: I provide free-text information on some sales scenarios, just indicating what could be transactional data or master data, and the AI puts this information in the right context and knows which piece of free text belongs to which object (see the next screenshot for more details).

What does it mean?

Loading new information into any system requires understanding both the business context of the data and the structure of the data in the data lake or database. In this scenario, the "world knowledge" of ChatGPT let it understand the business context, and the prompt provided the structural information.

Show me the tables!

I asked ChatGPT to present this data in tables to validate whether it had the right understanding. Just enjoy how this works.

It is worth noticing that the concept of master data is understood, and even the IDs match as needed for a relational database. I believe that happened because my initial prompt indicated a relational data structure.
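As an illustration of what "matching IDs" means in a relational structure, here is a small sketch using Python's built-in `sqlite3`. The table and column names, the price and the quantity are my own assumptions for illustration; they are not taken from the screenshots.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
-- Master data (hypothetical layout)
CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE materials (material_id INTEGER PRIMARY KEY, name TEXT, unit_price REAL);

-- Transactional data, linked to master data via IDs
CREATE TABLE orders (
    order_id INTEGER PRIMARY KEY,
    customer_id INTEGER REFERENCES customers(customer_id)
);
CREATE TABLE order_items (
    order_id INTEGER REFERENCES orders(order_id),
    material_id INTEGER REFERENCES materials(material_id),
    quantity INTEGER
);

-- Made-up example rows
INSERT INTO customers VALUES (1, 'Christian');
INSERT INTO materials VALUES (1, 'Banana', 0.5);
INSERT INTO orders VALUES (1, 1);
INSERT INTO order_items VALUES (1, 1, 10);
""")

# The IDs let us join transactions back to their master data.
rows = conn.execute("""
SELECT c.name, m.name, oi.quantity
FROM order_items oi
JOIN orders o ON o.order_id = oi.order_id
JOIN customers c ON c.customer_id = o.customer_id
JOIN materials m ON m.material_id = oi.material_id
""").fetchall()
print(rows)
```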

What does it mean?

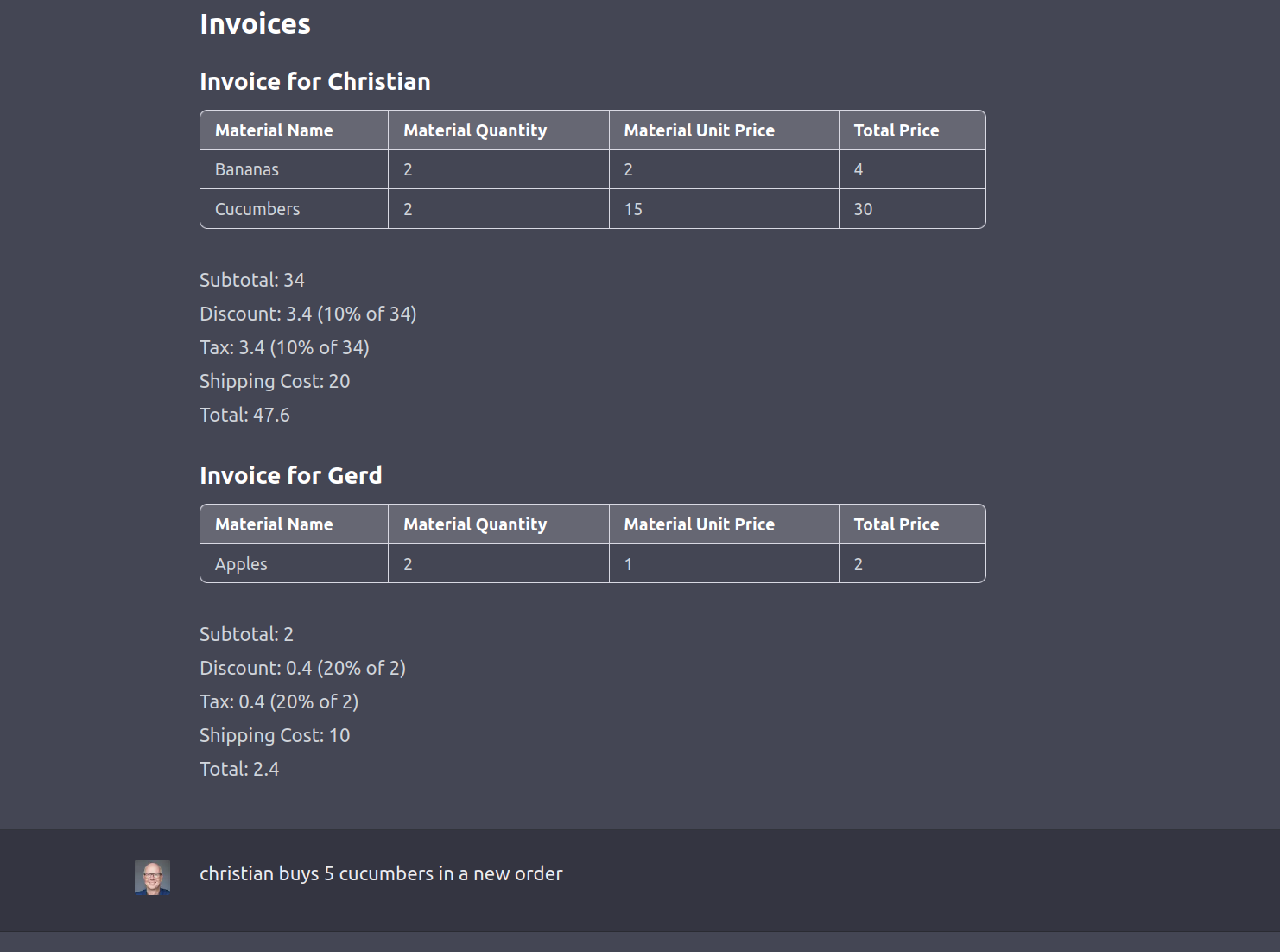

I tried some more scenarios, like "Christian buys the same again", and it got them right every time. Basically, anybody with business context is able to load data into a lake. Considering that ChatGPT speaks multiple languages, this is the concept of one interface to the data.

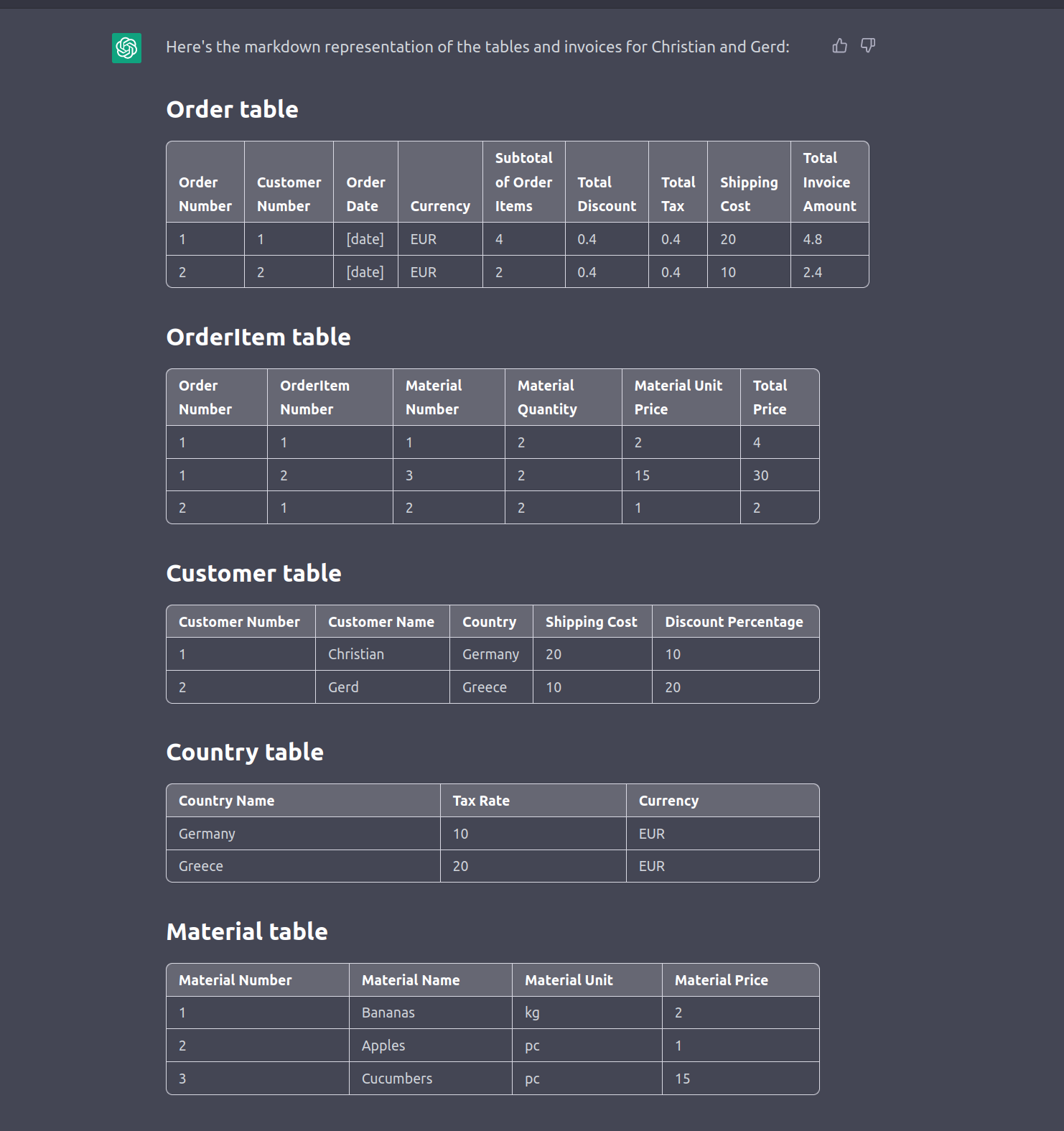

Print an Invoice

I asked it to generate an invoice based on the information provided, and the result looks nice. In addition (in another chat), I asked it to add certain details, and the invoice layout was adjusted.

What does it mean?

ChatGPT has "learned" a big part of the internet, it has read "all books", and it now has a memory that somehow represents common thinking (in topics with a lot of reference material).

This common standard was applied to the invoice, so the result is an invoice as most people would expect to see it. This will not fulfill everybody's needs, but a user could refine the layout and the logic in this conversation.

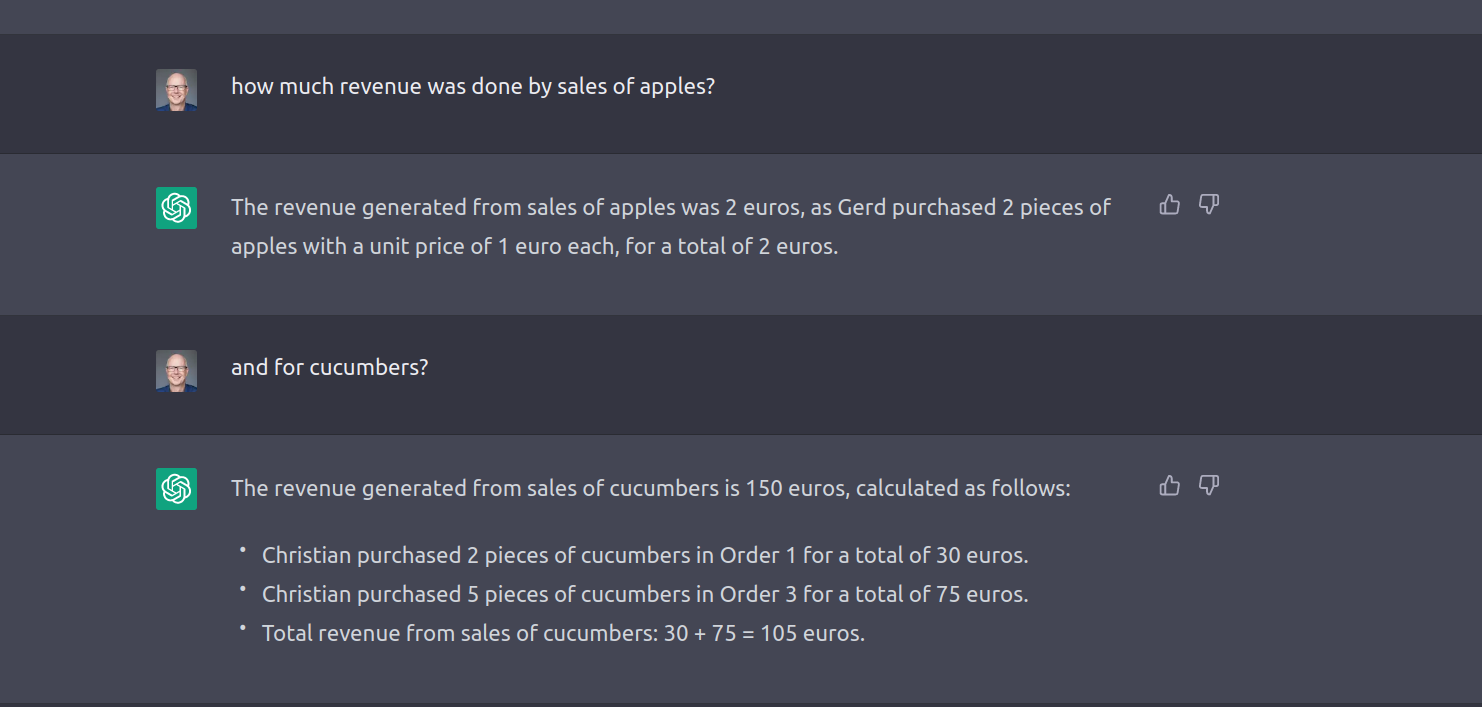

Run the Analytics

I was interested in the revenue of my little business, so why not just ask for it?

I tried other examples, such as "Revenue of Fruits". If there were regions, you could ask for "Revenue in EMEA" or "Total Tax sold on Bananas". The returned answers worked out.

What does it mean?

As you can see, the answer was provided. Again, "revenue" was not in the original data model; the answer came from the conversational context (data structure, sales information) and common knowledge.

You will also notice that there is an explanation in the answer, which makes it easier to understand how the results have been calculated. It is possible to ask for more details on each step.
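The kind of calculation behind such an answer can be sketched in a few lines. The items, categories and prices below are made up for illustration, and the `revenue` helper is hypothetical; the point is only that revenue is derived (quantity times unit price) rather than stored in the data model.

```python
# Made-up order items; none of these values come from the actual chat.
order_items = [
    {"material": "Banana", "category": "Fruit", "quantity": 10, "unit_price": 0.50},
    {"material": "Apple", "category": "Fruit", "quantity": 4, "unit_price": 0.75},
    {"material": "Bread", "category": "Bakery", "quantity": 2, "unit_price": 3.00},
]


def revenue(items, category=None):
    """Sum quantity * unit_price, optionally restricted to one category."""
    selected = [i for i in items if category is None or i["category"] == category]
    return sum(i["quantity"] * i["unit_price"] for i in selected)


total_revenue = revenue(order_items)           # all items
fruit_revenue = revenue(order_items, "Fruit")  # "Revenue of Fruits"
print(total_revenue, fruit_revenue)
```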

Adding COGS and OPEX

Moving away from sales to get my "Profit and Loss", I needed to add COGS and OPEX calculations to my sales. This requires adding new data, but also updating the data structures.

What does it mean?

One sentence "OPEX expenses are 5 Euros per order" resulted in 3 steps:

- adjusting the table structure

- calculating the values based on the formula

- updating the existing data

This is already a small IT project 🙂

ChatGPT sees data structure and content as "one input", hence it will try to keep all of the above elements consistent and aligned. What is a difficult task in an analytics project comes naturally here.

(*) Please note that this process does not really make full sense from a financial perspective!
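For comparison, the three steps triggered by "OPEX expenses are 5 Euros per order" could look roughly like this when done by hand. This is a sketch with `sqlite3`; the `orders` table and its rows are hypothetical, only the 5 Euros per order comes from the conversation.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [(1, "Christian"), (2, "Christian")],  # made-up existing orders
)

OPEX_PER_ORDER = 5.0  # "OPEX expenses are 5 Euros per order"

# Step 1: adjust the table structure.
conn.execute("ALTER TABLE orders ADD COLUMN opex REAL")

# Steps 2 and 3: calculate the value from the rule and update existing data.
conn.execute("UPDATE orders SET opex = ?", (OPEX_PER_ORDER,))

opex_rows = conn.execute(
    "SELECT order_id, opex FROM orders ORDER BY order_id"
).fetchall()
total_opex = sum(opex for _, opex in opex_rows)
print(opex_rows, total_opex)
```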

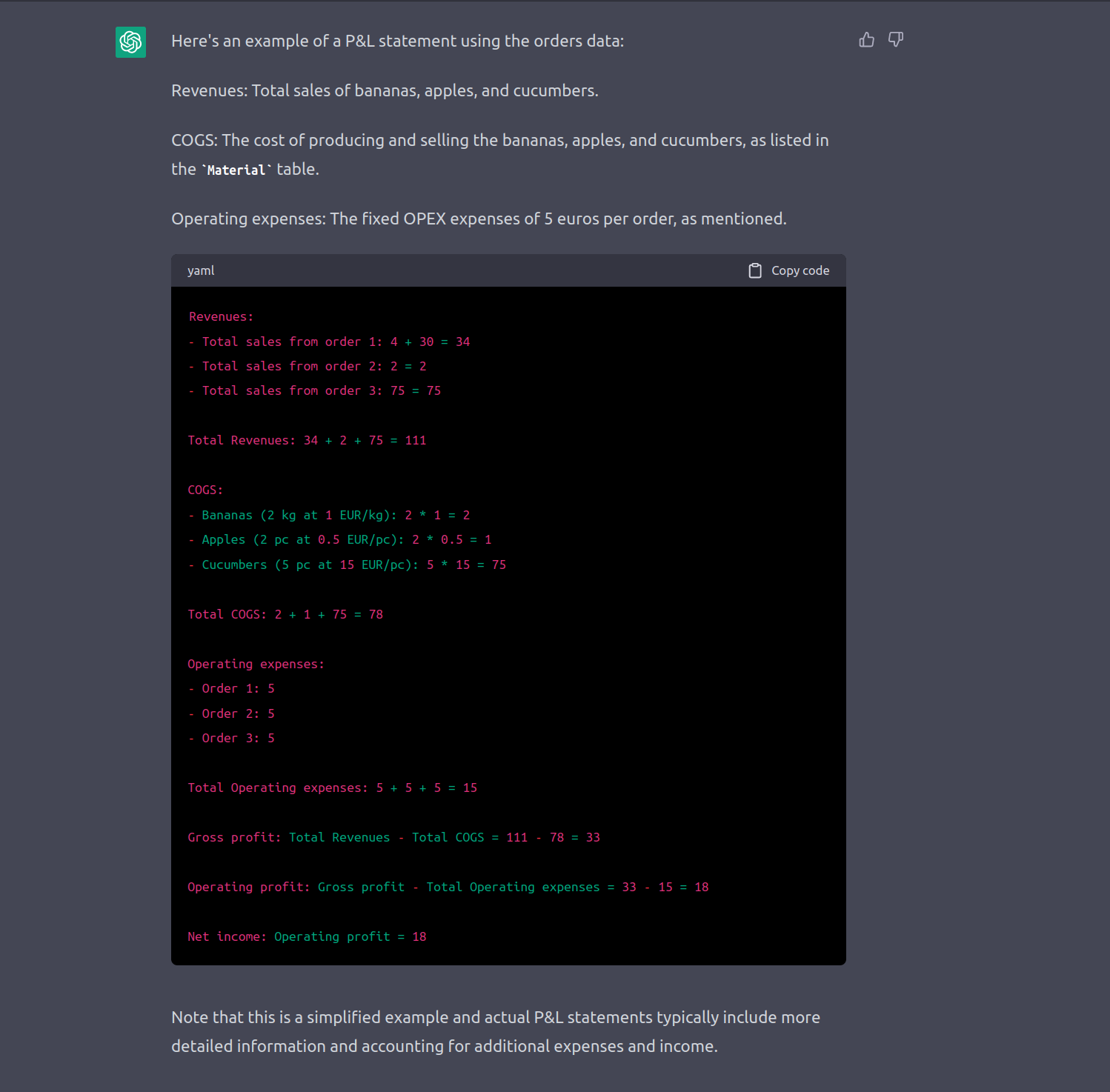

Profit and Loss Statement

I asked to get the P&L Statement - and got it.

I also tried this without COGS and OPEX, and ChatGPT complained that the data was not enough to build a statement.

What does it mean?

Again, this is a combination of world knowledge and conversational knowledge. The interesting part is that we moved from sales to finance, and the data is now in a different context. The use of a database is not driven by its original design, but by the context and the questions asked.
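A heavily simplified sketch of such a statement is shown below. The `profit_and_loss` function and the numbers are hypothetical, and a real P&L has many more lines; the sketch only shows the basic arithmetic and why the statement cannot be built while COGS or OPEX are missing.

```python
def profit_and_loss(revenue, cogs=None, opex=None):
    """Build a minimal P&L; refuse if COGS or OPEX are not available."""
    missing = [name for name, value in (("COGS", cogs), ("OPEX", opex)) if value is None]
    if missing:
        raise ValueError("Not enough data for a P&L, missing: " + ", ".join(missing))
    gross_profit = revenue - cogs
    operating_profit = gross_profit - opex
    return {
        "revenue": revenue,
        "cogs": cogs,
        "gross_profit": gross_profit,
        "opex": opex,
        "operating_profit": operating_profit,
    }


# Made-up figures in Euros.
statement = profit_and_loss(revenue=100.0, cogs=60.0, opex=10.0)
print(statement)
```

Calling `profit_and_loss(revenue=100.0)` without the other two inputs raises a `ValueError`, similar in spirit to ChatGPT refusing to build the statement.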

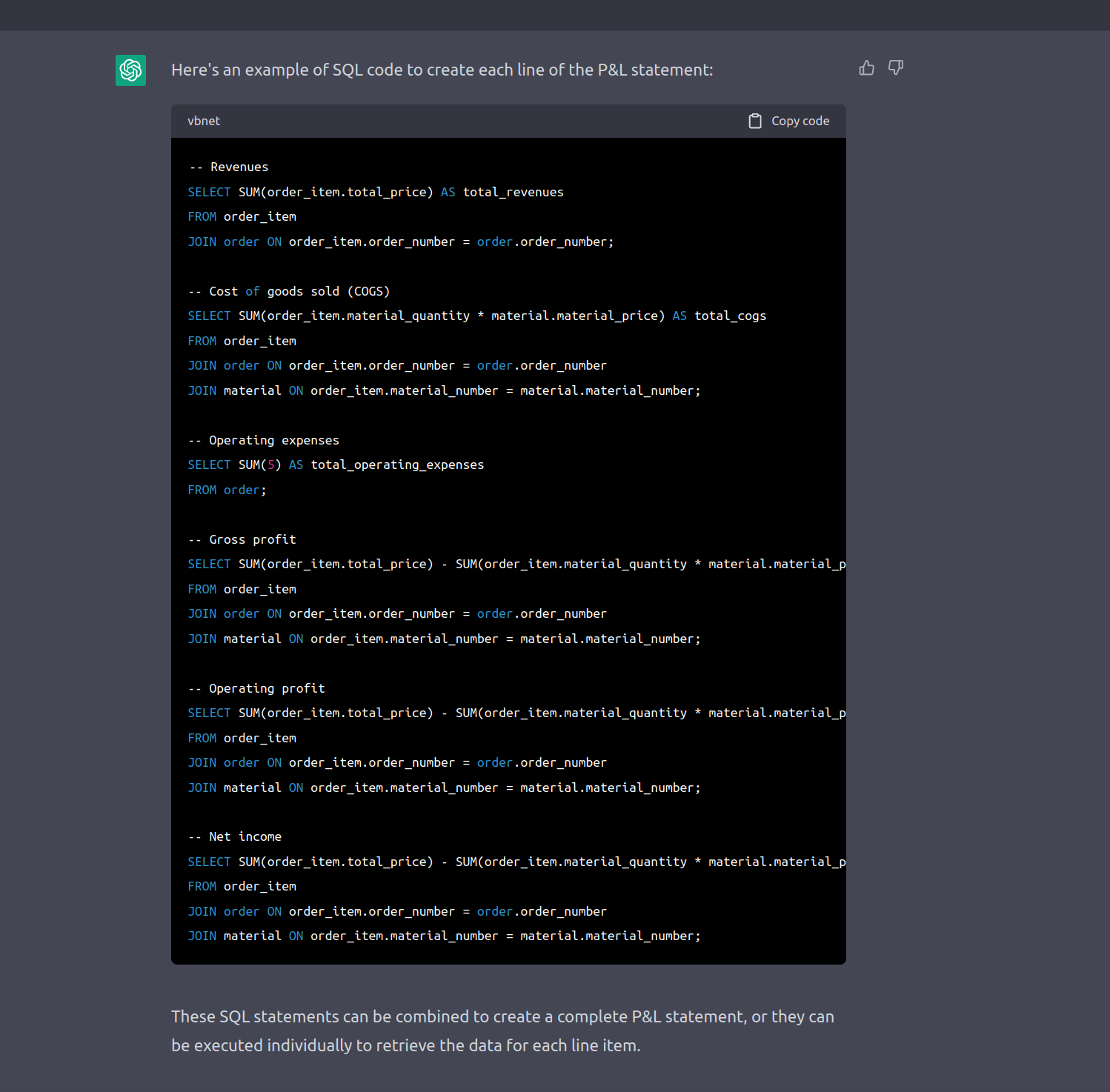

Build an Application

Because conversational knowledge is restricted, it is a good idea to conserve it in code or SQL statements. I must admit I did not check those statements. I did check the statements for other scenarios and found the quality pretty good; some joins would have taken me a lot of time to build.

What does it mean?

SQL statements or programs can be generated by ChatGPT, and it is really, really good at it. These statements can be seen as persisted knowledge, a way to get the outcome documented and reusable. A normal user query can simply be executed by ChatGPT, while something like a P&L statement should be based on a fixed, approved set of statements. Both ways are possible.
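One possible way to keep a "fixed, approved set of statements" is a small registry of named queries. The sketch below is only one option under my own assumptions: the `APPROVED_STATEMENTS` dictionary, the `run_approved` function and the table layout are all hypothetical.

```python
import sqlite3

# Hypothetical registry: only reviewed SQL statements are listed here.
APPROVED_STATEMENTS = {
    "revenue_total": "SELECT SUM(quantity * unit_price) FROM order_items",
}


def run_approved(conn, name):
    """Execute a statement only if it is part of the approved set."""
    if name not in APPROVED_STATEMENTS:
        raise KeyError(f"Statement '{name}' is not approved")
    return conn.execute(APPROVED_STATEMENTS[name]).fetchone()[0]


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE order_items (material TEXT, quantity INTEGER, unit_price REAL)"
)
conn.executemany(
    "INSERT INTO order_items VALUES (?, ?, ?)",
    [("Banana", 10, 0.5), ("Apple", 4, 0.75)],  # made-up rows
)

approved_result = run_approved(conn, "revenue_total")
print(approved_result)
```

Spontaneous user questions would bypass such a registry, while reporting that needs sign-off would go through it.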

BIG CHANGE AHEAD

Revolutionizing Data and Analytics: Envisioning the Future of AI that Combines General and Domain-Specific Capabilities for Unmatched Power

Sam Altman, the CEO of OpenAI, envisions a near future where custom-built/individual AI will be common and can have custom characteristics or knowledge.

I envision a domain-specific Data and Analytics AI enriched with common world knowledge that consists of three components:

- Validated common world understanding (common layer)

- Validated subject matter expert knowledge (e.g. validated finance rulesets, US GAAP, SEC, Medical Research). These rulesets could be tailored to a scope (research area), a process (sales) or an application, like SAP BW or CFIN.

- Prompt Knowledge: Understanding of the specific details of a data lake or structure, which provides the overall framework for storing the data and gives direction to build the overall design. Similar to the prompt that has been used in previous examples, the prompt could also be the knowledge of a legacy system that needs to be used.

Companies will start selling these AIs as bundles, combining the first two components and helping the customer set up the prompt knowledge.

AI is already used in analytics, and it is already possible to ask questions in natural language that get translated into a dashboard, but I believe this will be on a different level.

Different Way of Working

Working with these platforms will be different: more flexible, and with lower costs for changes and updates. It will require distinguishing between repeatable, fixed information that is still baked into a fixed structure and a spontaneous query by a user.

It is not clear how the future will be, but it will not remain the same. Given the current speed of development and the huge impact of AI in Data and Analytics, it will change, with new tools and new ways of using/accessing data.

When making significant investments in Data and Analytics, this revolution should be kept in mind.