Last updated 4 years ago by cneuhaus

In this short post I summarize my development environment using PlatformIO and CLion. I have adjusted the platformio.ini file to include the libraries and files needed, so you can get started right away. EdgeImpulse provides a similar setup for working with the Arduino IDE.

Background

I use PlatformIO as the development platform for embedded projects and CLion as my IDE. The two are integrated, and together they provide a consistent way of programming, compiling and deploying, independent of the MCU/CPU used. I try to use the Arduino libraries, as to a certain degree they provide platform independence; in addition, many libraries are available for Arduino. For the machine learning part I rely on EdgeImpulse, a cloud platform that supports the end-to-end machine learning cycle. So, in a nutshell:

- PlatformIO – Platform for embedded development

- CLion – Integrated Development Environment with PlatformIO integration

- EdgeImpulse – Development platform for machine learning on edge devices

The magic salt in the soup: EdgeImpulse

EdgeImpulse takes away the two most complicated parts: the model design/training and the feature extraction. It's a science of its own to run a continuous inference process on a small MCU (e.g. an ESP32) that includes recording the voice, running a Fourier transform, applying a Mel transformation and finally feeding the features into the model. The feature extraction during training (running in the cloud or on a desktop PC) and later during inference (running on the ESP) must follow exactly the same rules from both a logic AND a timing perspective, which adds complexity to embedded ML projects. Syntiant has partnered with EdgeImpulse, so the feature extraction and inference process has been tailored for the NDP101, and there is no need to deep dive into its architecture.
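To make the feature-extraction step a bit more concrete, here is a minimal, generic C++ sketch of the stages named above for a single audio frame: a power spectrum (a naive DFT stands in for the FFT) followed by triangular Mel filters with log compression. It rests on my own assumptions (HTK-style Mel formula, even frame length, filters spanning 0 Hz to Nyquist), and the function names are hypothetical; it is a textbook illustration, not the DSP code EdgeImpulse generates or that runs on the NDP101.

```cpp
#include <cassert>
#include <cmath>
#include <complex>
#include <cstddef>
#include <vector>

// Hz -> Mel (HTK-style formula; an assumption, other variants exist).
double hz_to_mel(double hz) { return 2595.0 * std::log10(1.0 + hz / 700.0); }

// Mel -> Hz, the inverse of hz_to_mel.
double mel_to_hz(double mel) { return 700.0 * (std::pow(10.0, mel / 2595.0) - 1.0); }

// Power spectrum of one frame, returning N/2 + 1 bins.
// A naive O(N^2) DFT keeps the sketch dependency-free; on a real MCU
// you would use an optimized FFT instead.
std::vector<double> power_spectrum(const std::vector<double>& frame) {
    assert(!frame.empty());
    const std::size_t n = frame.size();
    const double pi = std::acos(-1.0);
    std::vector<double> power(n / 2 + 1, 0.0);
    for (std::size_t k = 0; k <= n / 2; ++k) {
        std::complex<double> acc(0.0, 0.0);
        for (std::size_t t = 0; t < n; ++t) {
            const double angle = -2.0 * pi * static_cast<double>(k * t) / static_cast<double>(n);
            acc += frame[t] * std::polar(1.0, angle);
        }
        power[k] = std::norm(acc) / static_cast<double>(n);
    }
    return power;
}

// Log energies of `num_filters` triangular filters spaced evenly on the
// Mel scale between 0 Hz and the Nyquist frequency.
std::vector<double> mel_energies(const std::vector<double>& power,
                                 double sample_rate, std::size_t num_filters) {
    assert(power.size() >= 2 && sample_rate > 0.0 && num_filters > 0);
    const std::size_t num_bins = power.size();        // N/2 + 1
    const std::size_t fft_size = (num_bins - 1) * 2;  // N (assumes N is even)
    const double mel_max = hz_to_mel(sample_rate / 2.0);

    // num_filters + 2 edge points, equally spaced in Mel, mapped to FFT bins.
    std::vector<std::size_t> edge(num_filters + 2);
    for (std::size_t i = 0; i < edge.size(); ++i) {
        const double mel = mel_max * static_cast<double>(i) / static_cast<double>(num_filters + 1);
        edge[i] = static_cast<std::size_t>(
            std::floor((fft_size + 1) * mel_to_hz(mel) / sample_rate));
    }

    std::vector<double> energies(num_filters, 0.0);
    for (std::size_t m = 1; m <= num_filters; ++m) {
        // Rising slope from edge[m-1] to edge[m], falling slope to edge[m+1].
        for (std::size_t k = edge[m - 1]; k < edge[m + 1] && k < num_bins; ++k) {
            const double weight = (k < edge[m])
                ? static_cast<double>(k - edge[m - 1]) / static_cast<double>(edge[m] - edge[m - 1])
                : static_cast<double>(edge[m + 1] - k) / static_cast<double>(edge[m + 1] - edge[m]);
            energies[m - 1] += weight * power[k];
        }
        // Log compression; the small offset avoids log(0) for empty filters.
        energies[m - 1] = std::log(energies[m - 1] + 1e-10);
    }
    return energies;
}
```

For example, a 512-sample frame at 16 kHz (32 ms) yields 257 power bins, which `mel_energies(p, 16000.0, 20)` reduces to 20 features. Changing any of these parameters between training and on-device inference would change the features, which is exactly the consistency problem EdgeImpulse takes off your hands.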

So... what do I get, and how do I actually build something?

Once training is completed (see the detailed description from EdgeImpulse), EdgeImpulse gives you two options to get the software onto your device: you can directly download a binary image and test your model, or (and this is where it gets really interesting) download source code that contains:

- Feature Extraction SW (DSP…)

- Trained Model

- Model inference (feed the features into the model and run inference)

- Library to integrate the model decision (the inference result) into your C code

- Example program based on the Arduino framework that uses all of the above and shows that it actually works

- For the NDP101, it also contains the libraries to communicate directly with the NDP101 via SPI commands (load model, run model, …). I am still exploring the different commands and options.
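The "model decision" part can be illustrated with a small, self-contained C++ sketch: pick the best-scoring label that reaches a threshold, skip a background class, and only react once the same label has won several consecutive inference windows. `Classification`, `decide` and `DecisionFilter` are hypothetical names of my own, loosely mirroring the per-label scores the EdgeImpulse C++ SDK reports after `run_classifier`; the threshold and the "noise" label are assumptions too. On the NDP101 the match may instead be signalled by the chip itself, so treat this as an illustration of the idea, not the library's API.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for one classifier output entry: a label and its
// score (0..1), similar in spirit to the label/value pairs of the EdgeImpulse SDK.
struct Classification {
    std::string label;
    float value;
};

// Index of the best-scoring label that reaches `threshold`, skipping a
// background class (e.g. a hypothetical "noise" label); -1 means "no decision".
int decide(const std::vector<Classification>& results, float threshold,
           const std::string& background_label) {
    int best = -1;
    for (std::size_t i = 0; i < results.size(); ++i) {
        const Classification& c = results[i];
        if (c.label == background_label || c.value < threshold) continue;
        if (best < 0 || c.value > results[static_cast<std::size_t>(best)].value) {
            best = static_cast<int>(i);
        }
    }
    return best;
}

// Continuous inference produces one decision per window; this filter only
// fires once the same label has won `required_hits` consecutive windows.
class DecisionFilter {
public:
    explicit DecisionFilter(int required_hits) : required_(required_hits) {
        assert(required_hits > 0);
    }

    // Returns true exactly once per run, on the window that completes it.
    bool update(int decision) {
        if (decision < 0) {  // no decision resets the run
            last_ = -1;
            hits_ = 0;
            return false;
        }
        if (decision == last_) {
            ++hits_;
        } else {
            last_ = decision;
            hits_ = 1;
        }
        return hits_ == required_;
    }

private:
    int required_;
    int last_ = -1;
    int hits_ = 0;
};
```

For example, with a threshold of 0.6 and `DecisionFilter filter(2)`, a "yes" window scoring 0.8 has to be followed by a second winning "yes" window before the program reacts. Requiring consecutive hits is one simple way to suppress single-window false positives, at the cost of a little latency.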

Source Code: Ready-to-Go Package on GitHub

My initial template for this configuration is available on GitHub: https://github.com/happychriss/Goodwatch_NDP101_SpeeechRecognition

To build this, I downloaded the Syntiant/EdgeImpulse repository and adapted its file structure to the PlatformIO build system. In addition, I adjusted the platformio.ini file to include the needed libraries.

Please note that you will need to patch one of the libraries. The baseline for my work, as well as instructions on how to patch the local libraries, can be found here.

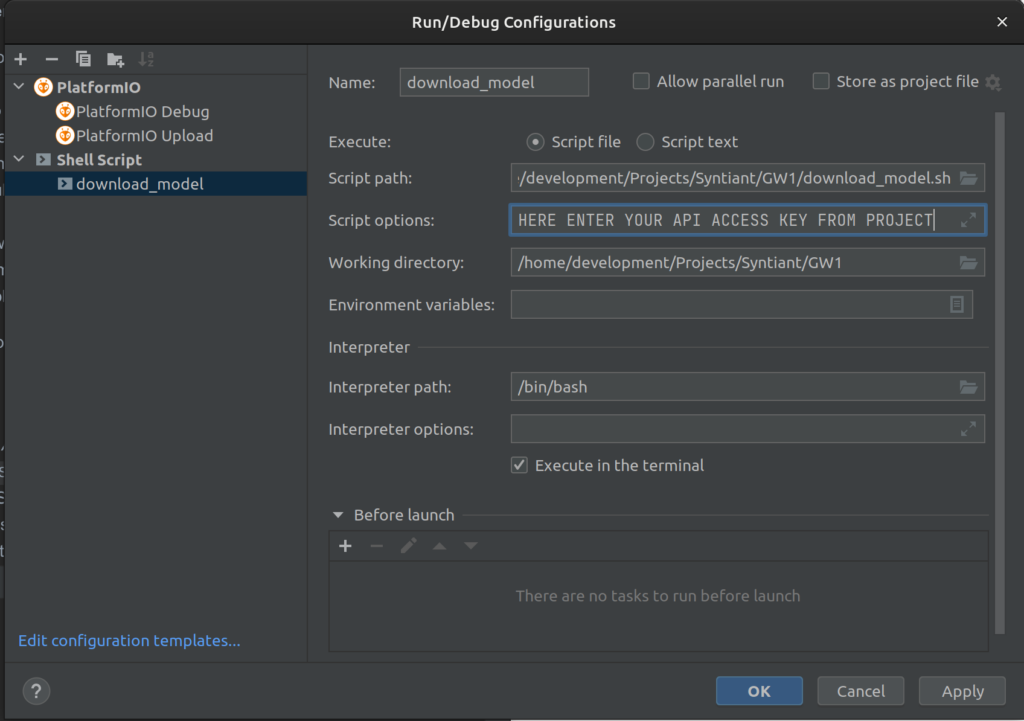

A nice goodie is the script download_model.sh, which connects to EdgeImpulse, downloads a *built* model and puts the files in the right folder (./src/edgeimpulse), so you can build your binary fresh from the model and upload it to the board. To start the script directly from CLion, add a run configuration for it. The API key for authorization on EdgeImpulse needs to be added to the run configuration's parameters.

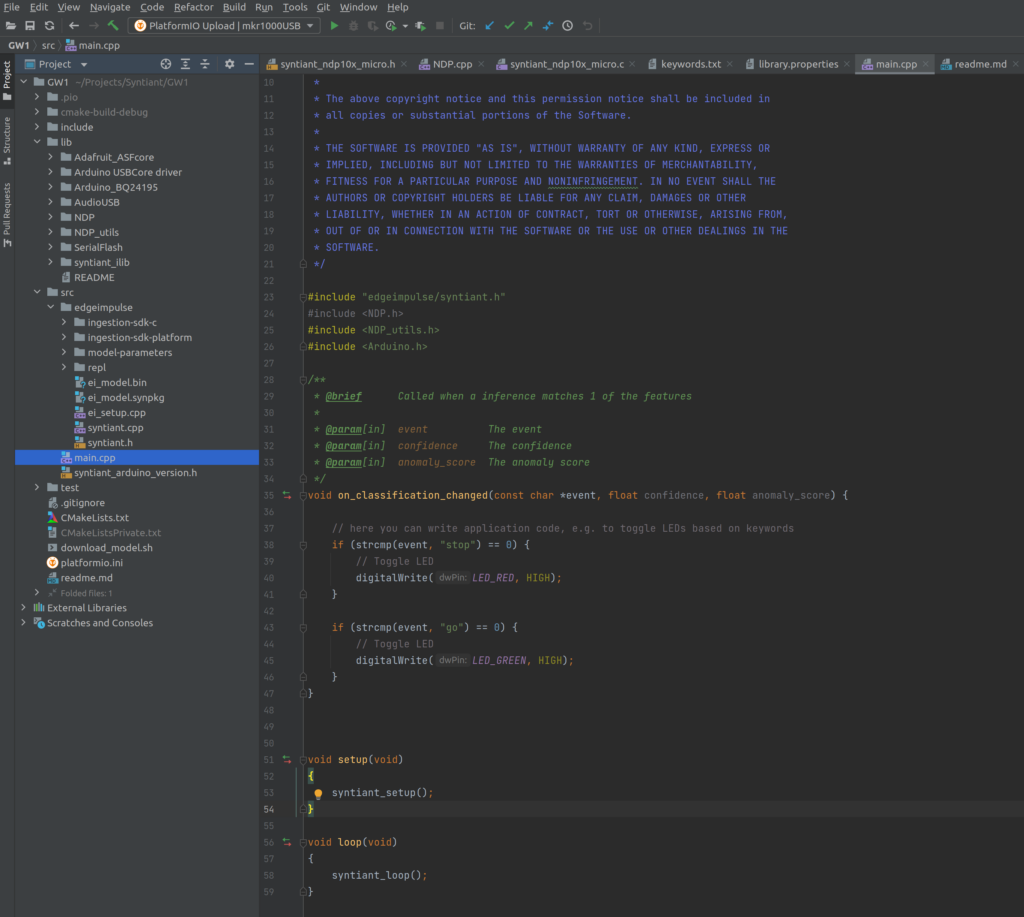

This is how it looks when everything is set up. Enjoy developing!
